Nullmax's Vision-Only Tech: Eyes for Autonomous Driving and Robotics to Explore the World

Date: 2024-09-03Driving with human-like abilities has long been seen as the key to realizing autonomous driving. Since vision is the most important sense for humans to perceive their environment, it is similarly vital for autonomous vehicles (AVs). Consequently, “vision-only” technology, which does not use LiDAR or millimeter-wave radar to perceive a vehicle's surroundings, is considered a core capability not only for AVs but also for the next generation of robotics.

By leveraging AI and cameras, machines can recognize colors, shapes, textures, and spatial relationships in the same way humans do, becoming accurately aware of their surroundings. For example, an AV uses vision to identify curbs and fences along the road, differentiate between various types and colors of lane markings, and detect pedestrians, vehicles, cones, barriers, traffic signs, and more. Additionally, it can directly output actions.

Vision-only technology, shows excellent capabilities in comprehending and interacting with the physical world, brings endless possibilities for autonomous driving.

Vision-only "Masters" All-Scenario Autonomous Driving

How would the physical world observed by a vision-only autonomous vehicle look? This is Nullmax vision-only AV equipped with 7 cameras, transitioning from one road to another, showcasing the output from its end-to-end autonomous driving model.

The video displays Nullmax's testing vehicle moving forward in the left lane, it accurately detects a newly separated left-turn lane before the intersection, and the vehicle then smoothly changes lanes and enters the intersection. Meanwhile, vehicles in the oncoming lane are turning right into the same target lane. Nullmax's vehicle merges smoothly into traffic at the appropriate moment, enters the correct lane, maneuvers around a bus parked partially on the road, yields for a vehicle to make a U-turn from the roadside, and finally completes the entire turning process.

In this video, the red dots outline the road boundaries such as curbs and fences, while the blue and green dots depict solid and dashed lines. The light blue dots represent stop lines, and the yellow line indicates the planned driving trajectory. Various colored boxes of different sizes represent different obstacles: red for small vehicles, sky blue for scooters and motorcycles, purple for bicycles, yellow for pedestrians, and green for large vehicles.

These simple dots, lines, and boxes demonstrate the outstanding scene comprehension capabilities of Nullmax's end-to-end autonomous driving model, particularly its ability to understand complex road structures. Without relying on maps for road attributes and topology, a vehicle equipped with Nullmax's autonomous driving technology can use AI to infer all necessary surrounding information in real-time by using cameras. This allows Nullmax's AVs to handle any driving scenario and navigate any road on this planet, without geographical restrictions.

Promising & Interpretable: Nullmax's Vision-Based End-to-End System

Nullmax's end-to-end autonomous driving model primarily uses visual information as input. Equipped with only cameras and guided by simple navigation instructions like left and right turns, it can achieve autonomous driving in urban areas.

The end-to-end model functions like a human brain, processing the inputs of visual signals to understand the surroundings and plan actions, including both dynamic and static perception tasks, and directly outputting driving behaviors. For instance, it can merge into the correct lane in a split-lane scenario at an intersection, make turns in narrow spaces with fences on both sides, and interact flexibly with obstacles.

Moreover, the simplicity of the sensor setup results in relatively low computational power requirements. Nullmax’s autonomous driving solution for urban scenarios operates with less than 100 TOPS of sparse computing power, enabling affordable vehicles to have safe and intelligent driving without the need for expensive sensors or chips.

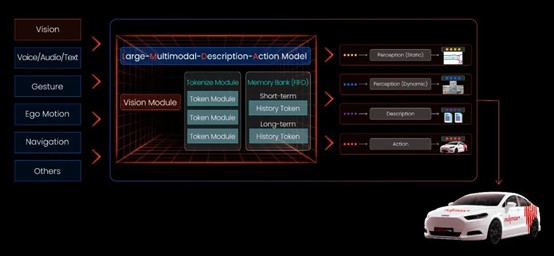

However, end-to-end learning-based autonomous driving systems are often considered black boxes due to the lack of interpretability in their outputs. To address this, Nullmax’s end-to-end system provides multimodal outputs that help explain its results. For instance, Nullmax’s model can simultaneously output dynamic perception, static perception, scene description, and action, offering a clear and comprehensive interpretation of the model's results.

The Nullmax’s new generation end-to-end autonomous driving technology incorporates multimodal model, enabling autonomous driving across all scenarios with high performance while maintaining interpretability.

Technical Reserve: Nullmax's Vision Research at Academic Conferences

For human drivers, the primary task is to "watch the road," and the same applies to autonomous driving, this ability includes understanding complex road structures and recognizing lane markings.

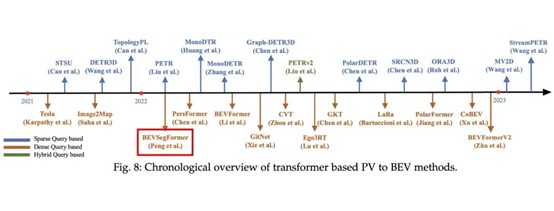

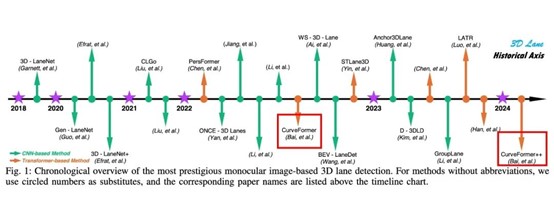

In 2022, Nullmax published the BEVSegFormer, a method for semantic segmentation and generating local maps in real-time with any camera configuration, and CurveFormer, an efficient method for 3D lane detection without explicitly constructing BEV (Bird's Eye View), both of which were accepted by prestigious international conferences WACV and ICRA.

According to the paper titled “Vision-Centric BEV Perception: A Survey”, published in IEEE TPAMI, a top-class academic journal in computer vision, Nullmax’s BEVSegFormer is among the earliest publicly available representative studies on BEV perception worldwide.

In the realm of monocular 3D lane detection, another article titled "Monocular 3D Lane Detection for Autonomous Driving: Recent Achievements, Challenges, and Outlooks" highlights Nullmax’s CurveFormer and its 2024 upgrade, CurveFormer++, as pioneering works in this area.

By integrating these advanced AI research into its end-to-end autonomous driving model, Nullmax’s vision-only AVs can understand road structures in real-time across various scenarios, without any map information.

Additionally, Nullmax presented two other vision-based object detection studies at top-tier international computer vision conferences, CVPR and ECCV, this year. One paper introduces QAF2D, a method that enhances 3D object detection with 2D detection, while the other, SimPB, proposes a single model for 2D and 3D object detection from multiple cameras. By tightly integrating the strengths of image space and BEV space detection, Nullmax significantly improves the range, accuracy, and stability of vision-only obstacle detection.

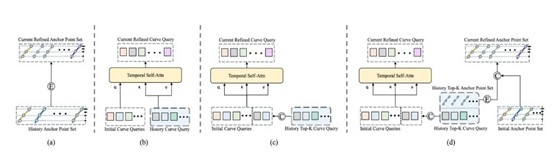

Building on the foundation of vision-only perception, Nullmax has further explored the integration of temporal information, enabling the entire autonomous driving network—including static perception, dynamic perception, and planning—to have "memory," like a human's. In February of 2024, Nullmax introduced an upgraded method, CurveFormer++, which explores sparse Query temporal fusion, comparing four different temporal fusion methods.

For autonomous driving, selecting the most effective temporal information to use is crucial, the challenge lies in balancing the amount of memory used—too much, and the hardware may not be able to store or process it efficiently; too little, and the performance gains are minimal. Nullmax’s sparse Query temporal fusion approach ensures that temporal information is effectively applied to the AI model, yielding excellent results without requiring excessive computational resources.

Years of research and development in vision have enabled Nullmax to establish a strong technological advantage in end-to-end autonomous driving and the deployment of vision-only solutions.

Nullmax firmly believes that vision-only is a necessary, core capability for autonomous driving. Not only can it independently accomplish a wide range of tasks and functions, but it is also key to achieving fully autonomous driving and embodied intelligence.

Media Relations

media@nullmax.aiRelated Articles

- Nullmax and Renesas Forge Strategic Partnership to Deliver Competitive ADAS Solutions for the Global Market 2025-02-25

- Nullmax Launches 'Nullmax Intelligence': End-to-End Autonomous Driving Technology 2024-07-16

- Explore Autonomous Driving with MLF: More Efficient and Easier to Scale-Up 2024-06-03

- Nullmax Introduces New Vision Technologies to Boost Self-Driving Performance, Delivering Exceptional Mobility Experience 2024-05-14

- Nullmax Upgrades BEV-AI Architecture of Self-Driving to Build End-to-End Solution for High-Level Autonomy 2024-03-28